Hi all,

I’ve recently been having trouble reading netCDF files with xarray in Python in my ARE Gadi session (Jupyter Notebook). I think it’s an environment/package issue. The line that fails is:

ds = xr.open_mfdataset(yearly_list, engine="netcdf4", chunks={"time": 1200, "realization": -1, "lon": -1, "lat": -1}, combine="by_coords", parallel=True)

Here is the error:

---------------------------------------------------------------------------
ImportError Traceback (most recent call last)
Cell In[6], line 2
1 # Near-surface air temperature
----> 2 tas = readData(year_start, "tas", model_code, year_end)['tas']
3 # Downwelling shortwave radiation
4 rsds = readData(year_start, "rsds", model_code, year_end)['rsds']

Cell In[3], line 16, in readData(year_start, var, model_code, year_end)
11 yearly_list = [
12 file for file in yearly_list
13 if (match := re.search(r'(\d{4})', file)) and year_start <= int(match.group(1)) <= year_end
14 ]
15 # Chunking with Dask
---> 16 ds = xr.open_mfdataset(yearly_list, engine="netcdf4", chunks={"time": 1200, "realization": -1, "lon": -1, "lat": -1}, combine="by_coords", parallel=True)
18 print(full_path)
19 return ds[['lon', 'lat', var]]

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/xarray/backends/api.py:1642, in open_mfdataset(paths, chunks, concat_dim, compat, preprocess, engine, data_vars, coords, combine, parallel, join, attrs_file, combine_attrs, **kwargs)
1637 datasets = [preprocess(ds) for ds in datasets]
1639 if parallel:
1640 # calling compute here will return the datasets/file_objs lists,
1641 # the underlying datasets will still be stored as dask arrays
-> 1642 datasets, closers = dask.compute(datasets, closers)
1644 # Combine all datasets, closing them in case of a ValueError
1645 try:

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/dask/base.py:662, in compute(traverse, optimize_graph, scheduler, get, *args, **kwargs)
659 postcomputes.append(x.__dask_postcompute__())
661 with shorten_traceback():
--> 662 results = schedule(dsk, keys, **kwargs)
664 return repack([f(r, *a) for r, (f, a) in zip(results, postcomputes)])

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/xarray/backends/api.py:686, in open_dataset()
674 decoders = _resolve_decoders_kwargs(
675 decode_cf,
676 open_backend_dataset_parameters=backend.open_dataset_parameters,
(...)
682 decode_coords=decode_coords,
683 )
685 overwrite_encoded_chunks = kwargs.pop("overwrite_encoded_chunks", None)
--> 686 backend_ds = backend.open_dataset(
687 filename_or_obj,
688 drop_variables=drop_variables,
689 **decoders,
690 **kwargs,
691 )
692 ds = _dataset_from_backend_dataset(
693 backend_ds,
694 filename_or_obj,
(...)
704 **kwargs,
705 )
706 return ds

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/xarray/backends/netCDF4_.py:666, in open_dataset()
644 def open_dataset(
645 self,
646 filename_or_obj: str | os.PathLike[Any] | ReadBuffer | AbstractDataStore,
(...)
663 autoclose=False,
664 ) -> Dataset:
665 filename_or_obj = _normalize_path(filename_or_obj)
--> 666 store = NetCDF4DataStore.open(
667 filename_or_obj,
668 mode=mode,
669 format=format,
670 group=group,
671 clobber=clobber,
672 diskless=diskless,
673 persist=persist,
674 auto_complex=auto_complex,
675 lock=lock,
676 autoclose=autoclose,
677 )
679 store_entrypoint = StoreBackendEntrypoint()
680 with close_on_error(store):

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/xarray/backends/netCDF4_.py:414, in open()
399 @classmethod
400 def open(
401 cls,
(...)
412 autoclose=False,
413 ):
--> 414 import netCDF4
416 if isinstance(filename, os.PathLike):
417 filename = os.fspath(filename)

File /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/netCDF4/__init__.py:3
1 # init for netCDF4. package
2 # Docstring comes from extension module _netCDF4.
----> 3 from ._netCDF4 import *
4 # Need explicit imports for names beginning with underscores
5 from ._netCDF4 import __doc__

ImportError: /g/data/xp65/public/apps/med_conda/envs/analysis3_edge-25.03/lib/python3.11/site-packages/netCDF4/../../../././libucc.so.1: undefined symbol: ucs_config_doc_nop
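For context, the year filter that readData applies before calling open_mfdataset (lines 11-14 in the traceback) can be pulled out into a standalone function and tested separately from the file reading. This is a minimal, hypothetical sketch: `filter_by_year` and the example paths are illustrative names, not from the original notebook, and matching on the file name only (rather than the full path, as readData does) is an assumption made so that digits in directory names are not mistaken for the year.

```python
import os
import re


def filter_by_year(paths, year_start, year_end):
    """Keep paths whose file name contains a 4-digit year in
    [year_start, year_end] (inclusive).

    Hypothetical helper. Assumption: the first run of 4 digits in the
    *file name* is the year. Using os.path.basename() means digits in
    directory names are ignored.
    """
    kept = []
    for path in paths:
        match = re.search(r"(\d{4})", os.path.basename(path))
        if match and year_start <= int(match.group(1)) <= year_end:
            kept.append(path)
    return kept


if __name__ == "__main__":
    # Illustrative paths only
    example = ["/data/tas_1990.nc", "/data/tas_2005.nc", "/data/v2019/tas_1995.nc"]
    print(filter_by_year(example, 1990, 2000))
```

If the filtered list looks right, the failure is confined to the open_mfdataset call itself, which matches the traceback ending in `import netCDF4`.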

I’ve tried loading both conda/analysis3-25.03 and conda/analysis3-25.11 and get the same error. I also get the same error when I use the h5netcdf engine instead of netcdf4. Has something changed in the conda environment? Please let me know if I can provide any more information.
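Since the traceback ends at a plain `import netCDF4`, one way to separate the problem from xarray and dask is to import the backend modules directly in the same kernel. The traceback paths point at analysis3_edge-25.03 while the loaded module is conda/analysis3-25.03, so the sketch also prints `sys.executable` and `sys.prefix` to show which environment the Jupyter kernel is actually running. This is a minimal sketch: `check_imports` is a hypothetical helper, and the assumption is that h5netcdf and h5py are the modules behind `engine="h5netcdf"`.

```python
import importlib
import sys


def check_imports(modules):
    """Try to import each module and record the outcome.

    Returns a dict mapping module name -> "ok" or "<ErrorType>: <message>".
    ImportError also covers compiled extensions that fail to load, such as
    an undefined symbol in a shared library (e.g. libucc.so.1).
    ModuleNotFoundError is a subclass of ImportError, so missing packages
    are reported the same way.
    """
    results = {}
    for name in modules:
        try:
            importlib.import_module(name)
            results[name] = "ok"
        except ImportError as exc:
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # Which Python / conda environment is this kernel actually using?
    print("executable:", sys.executable)
    print("prefix:    ", sys.prefix)
    # netCDF4 backs engine="netcdf4"; h5netcdf and h5py back engine="h5netcdf"
    for name, status in check_imports(["netCDF4", "h5netcdf", "h5py"]).items():
        print(f"{name}: {status}")
```

If `netCDF4` fails here with the same `undefined symbol` message, the problem is in the environment's compiled libraries rather than in the open_mfdataset arguments.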

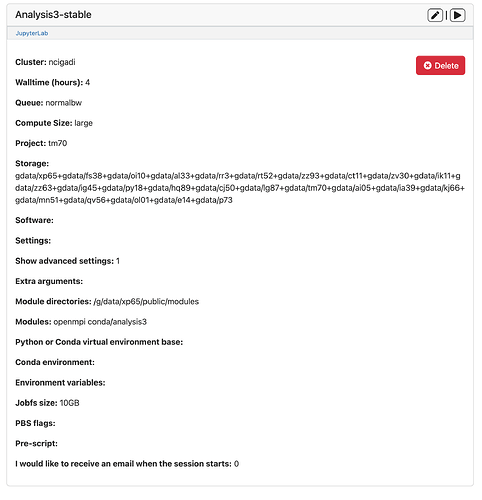

The loaded modules in my ARE session are:

Currently Loaded Modulefiles:
 1) singularity   2) conda/analysis3-25.03   3) pbs

Cheers,

Joel